Most investors would agree that over the past five years the economy of the United States, and even the global economy, has been befuddling. Simply put, trends typically derived from economic indicators have frequently led observers astray, leaving Fed members bewildered at the seemingly disjointed state of capital markets. Despite this, major indices (especially tech-heavy indices like the NASDAQ) have seemingly had only one direction to go, and that has been up! Many economists have attributed this to phenomena such as the post-lockdown reopening of businesses or the historically low unemployment rate, but anyone who has followed the markets this past year has heard the term “AI craze,” and that might be the most apt way to describe it.

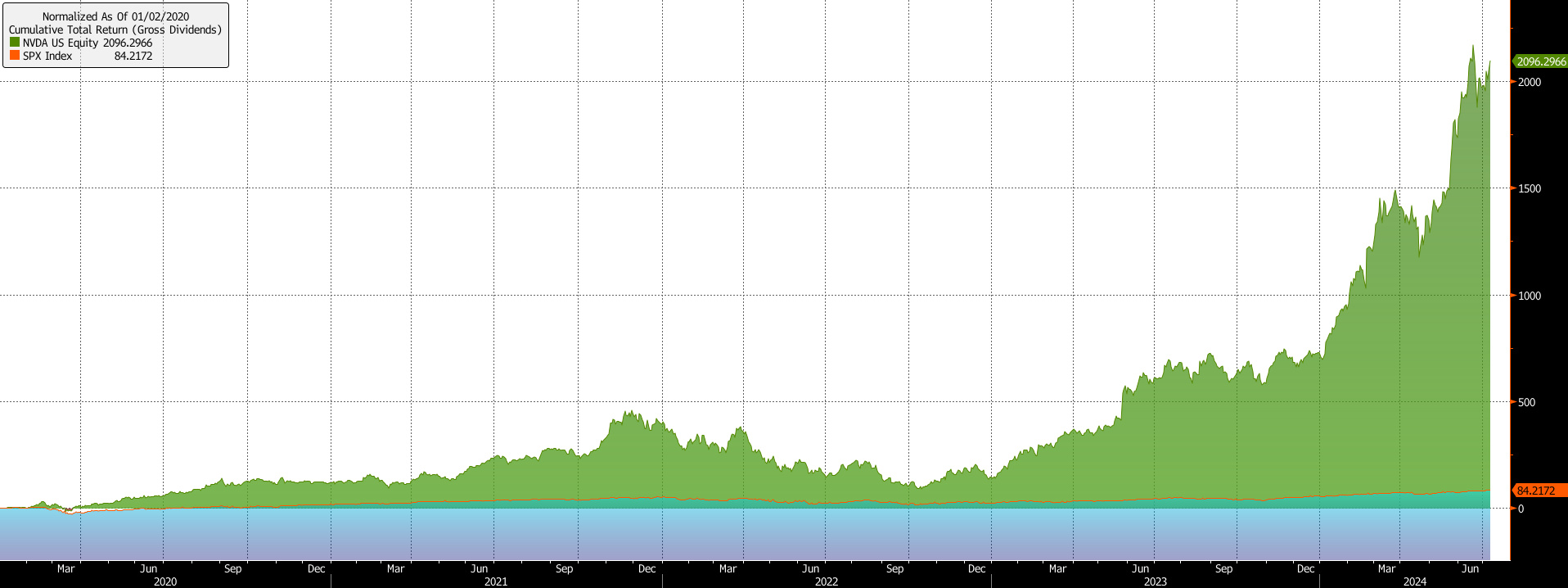

NVIDIA VS S&P 500

ARTIFICIAL INTELLIGENCE VS. MACHINE LEARNING

In the past decade, the rise of artificial intelligence (AI) has sparked an explosive revolution across various industries, pushing the boundaries of innovation and potential economic growth. But what exactly is AI, and how does it differ from machine learning (ML)?

AI refers to the creation of machines capable of performing tasks that typically require human intelligence, such as reasoning, learning and problem-solving. In contrast, traditional ML involves training algorithms on vast datasets to identify patterns and make decisions with minimal human intervention. This distinction is crucial to understanding the profound economic impact AI and its components have had, as well as their potential future uses.

ML, a subcategory of AI, operates on the principle of learning from data. By feeding algorithms vast amounts of information, these systems can identify patterns, make predictions, and improve their performance over time without being explicitly programmed for each task. This has led to significant breakthroughs in fields such as image and speech recognition and autonomous driving, and offers insights into countless other industries. While this is a truly amazing technological breakthrough, many people familiar with the industry still do not understand the difference between true AI and ML. Machine learning will accelerate tasks we all do today, but true AI will be revolutionary.
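To make “learning from data” a little more concrete, here is a minimal sketch in plain Python; it is an illustration, not how any production system works. It assumes a toy dataset generated by the rule y = 2x + 1 and a hypothetical helper called `fit_line`. The program is never told the rule. Instead, it recovers it by repeatedly adjusting two numbers to shrink its prediction error, a technique known as gradient descent on mean squared error.

```python
# A minimal sketch of "learning from data": fit y ≈ w*x + b by gradient descent.
# The toy data and the helper name fit_line are illustrative assumptions.

def fit_line(xs, ys, learning_rate=0.01, steps=5000):
    """Learn slope w and intercept b that minimize mean squared error."""
    w, b = 0.0, 0.0  # start with no knowledge of the pattern
    n = len(xs)
    for _ in range(steps):
        # Predict with the current parameters
        preds = [w * x + b for x in xs]
        # Gradients of the mean squared error with respect to w and b
        grad_w = (2 / n) * sum((p - y) * x for p, y, x in zip(preds, ys, xs))
        grad_b = (2 / n) * sum(p - y for p, y in zip(preds, ys))
        # Nudge each parameter in the direction that reduces the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data produced by the hidden rule y = 2x + 1
xs = [0, 1, 2, 3, 4, 5]
ys = [2 * x + 1 for x in xs]

w, b = fit_line(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f}")
print(f"prediction for x=10: {w * 10 + b:.2f}")
```

Real ML models follow the same loop of predict, measure the error, and adjust, but with millions or even billions of parameters instead of two. That scale is what drives the demand for specialized processing chips.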

What are some challenges facing AI developers today, and how long until I get my very own WALL-E-style garbage collector?

We humans still have much to learn about ourselves before we can replicate our cognitive abilities in a machine. Scientists are not sure exactly how our own brains work, let alone consciousness, but there have been plenty of new theories about our own computing power that have originated from ideas surrounding AI development.

Engineers have tried to create a quasi-neural network using an “agentic” approach. This is a type of AI system designed to act as an autonomous agent, using each separate system as a “voice” in its head, so to speak, with a central system that decides which path to take. It is just like when one voice in your head says, “Eat the cookie, don’t worry about the sugar,” while another says, “But think of how many ab crunches that is!” and you, the central decision-maker, eat it anyway because it tastes good.

What are “chips” and what do they have to do with AI?

With these new experiments come increased computing needs, and that is where “chips” come into play. When talking about them, it’s important to understand what semiconductor chips are and how they work. These “chips” are simply processing units built for specific purposes. They provide the computational power needed to run AI algorithms and process large amounts of data. They are made using lithography, in which engineers design circuits and build them up on thin wafers, layering silicon, copper and other materials. Once created, these circuits are grouped together, and code is written to allow the modules to talk to each other. That is the simplified version; if we really wanted to dig into the nitty-gritty, we might all need an electrical engineering degree.

NVIDIA

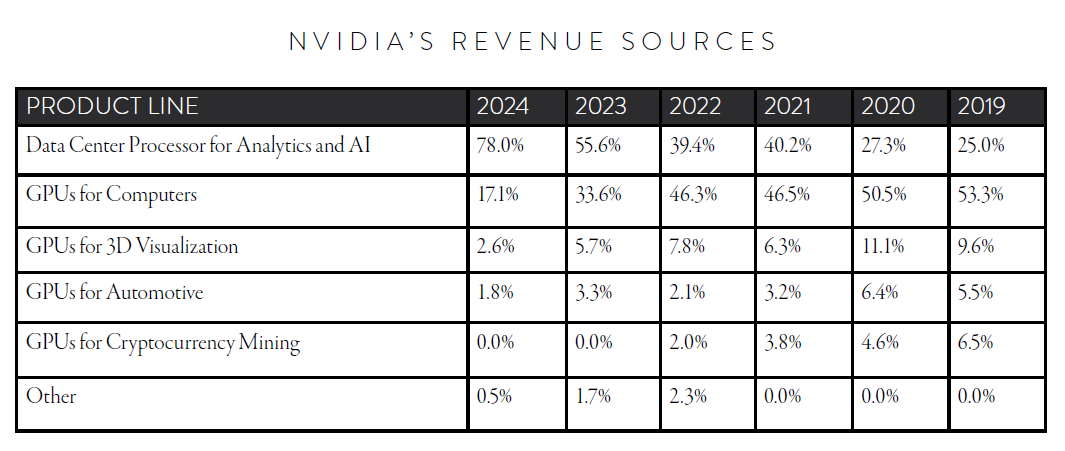

At the forefront of this movement is NVIDIA, a tech giant that has steadily improved its powerful GPUs (graphics processing units), which have become the backbone of AI development. In the not-so-distant past, NVIDIA was a fledgling consumer electronics company, selling processors for high-end PCs used mostly for gaming or 3D modeling. As recently as 2019, 53% of NVIDIA’s revenue came from computer GPUs; now it is only 17.1%. Although data center processing and AI analytics had been a business unit ever since NVIDIA founder Jensen Huang pushed for its development as early as 2006, it had not attracted widespread interest from large enterprises.

CRYPTOCURRENCY

This all changed with the rise of crypto and the founding of OpenAI. In the mid-2010s, cryptocurrency became the new asset class on the block(chain), with investors of all kinds flocking to this emerging technology. On the other side of the transaction were the crypto “miners,” computer farms that processed transactions on the blockchain in return for a small reward in the form of newly minted cryptocurrency. In the beginning, these computer farms used CPUs, or central processing units. Miners soon discovered that the architecture of NVIDIA’s GPUs was better suited to this type of processing, allowing them to earn rewards at a much faster rate. This was the first time large enterprises looked to NVIDIA to provide processing for data centers. Subsequently, when OpenAI debuted ChatGPT in 2022, it knew exactly where to get powerful chips to train and run its new large language model product. As a result, the data center processor and AI analytics business units now account for a massive 78% of NVIDIA’s total revenue. It is difficult to comprehend just how fast this company has grown and, even more so, how quickly it has shifted its main source of revenue.

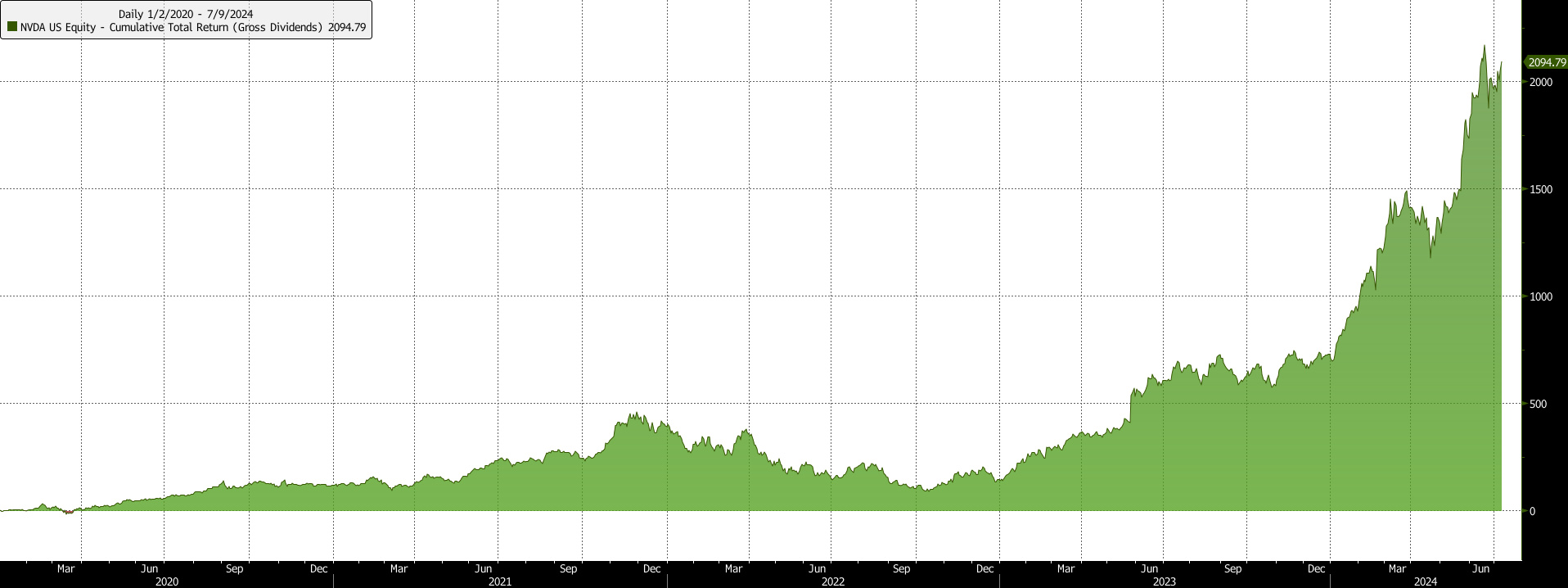

NVIDIA STOCK PRICE GROWTH (2020-2024)

NVIDIA’S MARKET SHARE

From a financial perspective, NVIDIA has been uniquely positioned to capture most of the market share in this space, thanks to a long track record of developing specialized chips. Starting around 2021, the company took advantage of the economic environment and began pushing its AI-specialized chips. Total revenue was $10.92B in 2020, grew to $16.68B the next year, hovered around $27B in 2022 and 2023, and ballooned to a massive $60B over the past 12 months. This caught investors’ attention, and they couldn’t get enough of the stock.
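The growth rates implied by those revenue figures can be checked with a few lines of arithmetic. The sketch below is only a back-of-the-envelope calculation under simplifying assumptions: “around $27B” is treated as exactly $27B for both 2022 and 2023, the trailing-12-month figure is labeled “TTM” and counted as a fourth full year after 2020, and the helper names `period_growth` and `cagr` are hypothetical.

```python
# Revenue figures (billions of USD) as quoted above.
# Assumptions: "around $27B" is treated as exactly 27.0 for 2022 and 2023,
# and the trailing-12-month ("TTM") figure is treated as the next full year.
revenue = [
    ("2020", 10.92),
    ("2021", 16.68),
    ("2022", 27.0),
    ("2023", 27.0),
    ("TTM", 60.0),
]

def period_growth(series):
    """Return (label, percent change from the previous entry) for each period."""
    growth = []
    for (_, prev), (label, curr) in zip(series, series[1:]):
        growth.append((label, (curr / prev - 1) * 100))
    return growth

def cagr(start, end, years):
    """Compound annual growth rate: the constant yearly rate linking start to end."""
    return (end / start) ** (1 / years) - 1

for label, pct in period_growth(revenue):
    print(f"{label}: {pct:+.1f}%")

# 2020 -> TTM counted as four years of growth (a simplifying assumption)
rate = cagr(revenue[0][1], revenue[-1][1], years=4)
print(f"Implied compound annual growth: {rate * 100:.1f}% per year")
```

The compound annual growth rate smooths over the uneven path (a flat stretch followed by a jump of more than 100%), which makes it a convenient single number for comparing against the “doubling every year” pace that markets have come to expect.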

The company’s new market cap briefly made it the largest company in the world and one of the largest holdings in the S&P 500. It continues to defy expectations, beating any top- and bottom-line estimates Wall Street throws its way. But you likely know this. That’s what everyone seems to be talking about at the moment, right?

NVIDIA took off, returning 2,080% since the beginning of 2020, including a 153% gain in 2024 alone.

NVIDIA’S REVENUE SOURCES

Warren Buffett comes to mind during times like these: “to be fearful when others are greedy and to be greedy only when others are fearful.”

While NVIDIA’s revenue and earnings are so far keeping up with expectations, a company can double in size every year only so many times. With valuations stretched, not just in the AI space but throughout the markets, this may be a good time to look at alternatives that could better weather a pullback or have more room to run.

THE COMPETITION

Several competitors stand poised to capitalize on the rapidly changing AI environment, even if they aren’t as big and flashy as NVIDIA. Advanced Micro Devices (AMD), a longtime rival of NVIDIA, has lagged because of a technology gap. However, recent developments at AMD, including the release of new “Radeon Instinct” chips, aim to close this gap. AMD holds around 20% of the GPU market.

Another strong contender is Intel. Traditionally a CPU maker, Intel has acquired Habana Labs and Mobileye to strengthen its position in the AI space. Habana Labs develops AI processors, while Mobileye specializes in autonomous driving technology.

While not primarily chip companies, both Amazon and Microsoft have also been investing in their own AI capabilities, creating business units to develop in-house solutions. Amazon developed its own AI chips, Inferentia and Trainium, to optimize ML on its cloud platform, AWS. Microsoft launched Project Brainwave, which provides real-time AI processing on its Azure cloud platform.

What does all this AI mean for us?

While we aren’t yet at the point of household robots with personalities, ML has allowed us to begin building the foundation we need to get there. This progress is largely due to NVIDIA’s breakthrough AI chips, which have propelled the company to market dominance and exponential growth, making it one of the largest companies on the planet. Only time will tell whether it can continue to widen its moat, so to speak, as other companies catch up. Ask ChatGPT, since it seems to know everything. Interestingly, ChatGPT wrote parts of this article! Let’s see if anyone notices!

This content is part of our quarterly outlook and overview. For more of our views on this quarter’s economic overview, inflation, bonds, equities and allocation, read the latest issue of Macro & Market Perspectives.

The opinions expressed within this report are those of the Investment Committee as of the date published. They are subject to change without notice, and do not necessarily reflect the views of Oakworth Capital Bank, its directors, shareholders or employees.